“Parallel computing is a method of computation in which multiple calculations are carried out simultaneously” (Wikipedia). Although parallel computing was initially restricted to high-performance computing, physical constraints like heat dissipation have meant that interest in parallel computing now spans all computing disciplines, including personal and mobile computing.

By parallelizing the computation, the workload on any given processing unit is expected to be smaller, which avoids hitting the physical constraints of processor architectures. Also, by employing multiple processors for the same job, the job is expected to complete sooner.

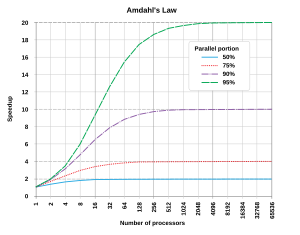

However, the speed benefit of parallel execution is constrained by Amdahl's law. Amdahl's law states that the “speedup of a program using multiple processors is limited by the time needed for the sequential fraction of the program” (Wikipedia). This law is important because it reminds the industry that not every problem benefits from being solved in parallel: if a large share of the work must run sequentially, adding processors yields little extra speed.

(Amdahl's law. Image retrieved from Wikipedia: http://upload.wikimedia.org/wikipedia/commons/thumb/e/ea/AmdahlsLaw.svg/300px-AmdahlsLaw.svg.png)
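Amdahl's law is commonly written as S(N) = 1 / ((1 - p) + p / N), where p is the fraction of the run time that can be parallelized and N is the number of processors. The sketch below evaluates that formula; the function name `amdahl_speedup` is our own choice. For a program that is 95% parallelizable, the speedup creeps towards, but never exceeds, 1 / (1 - 0.95) = 20, however many processors are added.

```python
def amdahl_speedup(parallel_fraction: float, processors: int) -> float:
    """Theoretical speedup predicted by Amdahl's law.

    parallel_fraction: share of the original run time that can be parallelized (0..1).
    processors: number of processors sharing the parallel part of the work.
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if processors < 1:
        raise ValueError("processors must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    # The serial part takes the same time no matter how many processors there are;
    # only the parallel part is divided among them.
    return 1.0 / (serial_fraction + parallel_fraction / processors)


if __name__ == "__main__":
    for n in (1, 2, 8, 64, 1024):
        print(f"{n:>5} processors: {amdahl_speedup(0.95, n):6.2f}x speedup")
```

Running the loop shows diminishing returns: each doubling of processors buys less than the previous one, because the fixed 5% serial part comes to dominate the total time.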

Computing parallelism is achieved at different levels, starting at the bit level and going on to the instruction level, the processor core level and the machine level. Bit-level parallelism is easy to recognize in the existence of 32-bit and 64-bit processors and operating systems. Increasing the word size lets the processor complete computations with fewer instructions, because each instruction operates on more bits at once.
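As a concrete illustration of "fewer instructions", the sketch below emulates how a processor with 32-bit registers might add two 64-bit unsigned integers: one add on the low 32-bit halves, then a second add on the high halves that includes the carry. A 64-bit processor does the same work in one add. The function names are hypothetical, and the code models the idea rather than any particular instruction set.

```python
MASK32 = 0xFFFFFFFF
MASK64 = 0xFFFFFFFFFFFFFFFF


def add32(a: int, b: int, carry_in: int = 0) -> tuple[int, int]:
    """One emulated 32-bit add: returns (32-bit result, carry out)."""
    total = a + b + carry_in
    return total & MASK32, total >> 32


def add64_on_32bit(x: int, y: int) -> tuple[int, int]:
    """Add two 64-bit unsigned ints using only 32-bit steps.

    Returns (64-bit result, number of 32-bit add steps used).
    """
    lo, carry = add32(x & MASK32, y & MASK32)  # step 1: low halves
    hi, _ = add32(x >> 32, y >> 32, carry)     # step 2: high halves plus carry (final carry dropped)
    return (hi << 32) | lo, 2


def add64_on_64bit(x: int, y: int) -> tuple[int, int]:
    """With 64-bit registers the same addition is a single step."""
    return (x + y) & MASK64, 1


if __name__ == "__main__":
    x, y = 0x1_FFFFFFFF, 1  # the low half overflows, so the carry matters
    print(hex(add64_on_32bit(x, y)[0]), "using", add64_on_32bit(x, y)[1], "steps")
    print(hex(add64_on_64bit(x, y)[0]), "using", add64_on_64bit(x, y)[1], "step")
```

Both functions wrap around modulo 2^64, so they agree on every input; the only difference is the number of steps, which is the saving that a wider word size provides.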

Instruction-level parallelism, on the other hand, lets the processor work on several instructions at once, for example through a deeper instruction pipeline. A deeper pipeline means that at any given moment the processor can be handling multiple instructions, each at a different stage of execution and each using a different part of the processor.
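To see why overlapping instructions helps, the sketch below builds the schedule of an idealized pipeline. It assumes the classic textbook five-stage layout (fetch, decode, execute, memory, writeback), one new instruction per clock cycle, and no stalls or hazards; real processors differ on all three points. Under those assumptions, n instructions finish in n + k - 1 cycles on a k-stage pipeline instead of n × k cycles without one.

```python
# Classic textbook five-stage RISC pipeline: an assumption for illustration only.
STAGES = ("fetch", "decode", "execute", "memory", "writeback")


def pipeline_schedule(n_instructions: int, stages: tuple[str, ...] = STAGES) -> list[dict[str, int]]:
    """Ideal pipeline: for each clock cycle, map stage name -> instruction number.

    Assumes one new instruction enters per cycle and no stalls or hazards occur.
    """
    if n_instructions <= 0:
        return []
    total_cycles = n_instructions + len(stages) - 1
    schedule = []
    for cycle in range(total_cycles):
        occupancy = {}
        for s, stage in enumerate(stages):
            instr = cycle - s  # instruction i occupies stage s during cycle i + s
            if 0 <= instr < n_instructions:
                occupancy[stage] = instr
        schedule.append(occupancy)
    return schedule


def unpipelined_cycles(n_instructions: int, stages: tuple[str, ...] = STAGES) -> int:
    """Without a pipeline, each instruction passes through every stage before the next starts."""
    return n_instructions * len(stages)


if __name__ == "__main__":
    sched = pipeline_schedule(4)
    for cycle, occupancy in enumerate(sched, start=1):
        print(f"cycle {cycle}: {occupancy}")
    print("pipelined:", len(sched), "cycles; unpipelined:", unpipelined_cycles(4), "cycles")
```

In the printed schedule, the middle cycles show several instructions in flight at once, one per stage, which is the overlap the paragraph above describes.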

When processor clock speeds approached 3 GHz, designs started to run into physical limits, especially unmanageable heat dissipation. Rather than trying to increase the frequency of a single processor, the industry came up with the multi-core processor design, in which each core runs at a somewhat lower clock speed (around 2.5 GHz). Some designs also treat the graphics processing unit (GPU) as an additional processor, increasing the parallelism inside the computer even further. These innovations have begun to reach mobile devices as well: most new smartphones have multi-core processors.
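Software only benefits from multiple cores if the work is split up explicitly. The sketch below, with hypothetical function names, divides a sum of squares into one chunk per core, computes the chunks in separate worker processes via Python's `concurrent.futures.ProcessPoolExecutor`, and then combines the partial results. Processes are used rather than threads because, in the standard CPython build, the global interpreter lock prevents threads from running Python code on several cores at once.

```python
import os
from concurrent.futures import ProcessPoolExecutor
from typing import Optional


def sum_of_squares(bounds: tuple[int, int]) -> int:
    """Sequential work for one chunk: sum of i*i for i in [start, stop)."""
    start, stop = bounds
    return sum(i * i for i in range(start, stop))


def split_range(n: int, parts: int) -> list[tuple[int, int]]:
    """Split [0, n) into `parts` contiguous chunks whose sizes differ by at most one."""
    step, extra = divmod(n, parts)
    chunks, start = [], 0
    for p in range(parts):
        stop = start + step + (1 if p < extra else 0)
        chunks.append((start, stop))
        start = stop
    return chunks


def parallel_sum_of_squares(n: int, workers: Optional[int] = None) -> int:
    """Compute one chunk per worker process, then add up the partial sums."""
    workers = workers or os.cpu_count() or 1
    with ProcessPoolExecutor(max_workers=workers) as pool:
        return sum(pool.map(sum_of_squares, split_range(n, workers)))


if __name__ == "__main__":
    n = 2_000_000
    print("parallel result matches sequential:",
          parallel_sum_of_squares(n) == sum_of_squares((0, n)))
```

The `if __name__ == "__main__":` guard matters here: on platforms that start worker processes by re-importing the main module, it stops each worker from launching a pool of its own. Note also that splitting and combining cost time, which is the serial fraction Amdahl's law warns about.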

There are also several methods of parallel computing that combine a large number of computing units (machines, processors, etc.). Distributed computing, cluster computing, massively parallel processing (MPP) and grid computing are some of these, and each differs from the others in its architecture.

Google is a great example of a huge commercial computing cluster. Although its details are not publicly available, it is one of the largest computing infrastructures in the world. What makes its cluster notable is that it is built from general-purpose Linux computers, which together serve the roughly 60,000 search queries Google receives each second (http://www.statisticbrain.com/google-searches/). Most large social platforms, such as Facebook and Twitter, use the same technique to handle the increasingly large amount of computation they have to perform.

Parallel computing confronts computer scientists with a different set of problems, such as state management, race conditions and orchestration. It is also generally accepted that programming for parallelism is harder and less productive than sequential programming, although this could well be because the current programming landscape is built on years of development in sequential thinking. Programming languages like Scala, F# and Haskell are working hard to make parallel programming more intuitive and productive.
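Race conditions are easiest to understand through a small example. In the sketch below (the class and function names are hypothetical), several threads increment a shared counter. `unsafe_increment` reads the value and writes it back in separate steps, so when threads interleave, one update can overwrite another and be lost; `safe_increment` performs the same operation while holding a `threading.Lock`. Whether the unsafe version actually loses updates on a given run depends on the interpreter and on scheduling, which is exactly what makes race conditions hard to reproduce and debug.

```python
import threading
from typing import Callable


class Counter:
    """A shared counter that several threads increment."""

    def __init__(self) -> None:
        self.value = 0
        self._lock = threading.Lock()

    def unsafe_increment(self) -> None:
        # Read and write happen in separate steps: another thread may run in
        # between, so two threads can write back the same value and lose an update.
        current = self.value
        self.value = current + 1

    def safe_increment(self) -> None:
        # Holding the lock makes the read-modify-write atomic with respect to
        # every other thread that also takes this lock.
        with self._lock:
            self.value += 1


def run(increment: Callable[[Counter], None], threads: int = 4, per_thread: int = 100_000) -> int:
    """Start `threads` threads that each call `increment` `per_thread` times."""
    counter = Counter()

    def work() -> None:
        for _ in range(per_thread):
            increment(counter)

    workers = [threading.Thread(target=work) for _ in range(threads)]
    for t in workers:
        t.start()
    for t in workers:
        t.join()
    return counter.value


if __name__ == "__main__":
    expected = 4 * 100_000
    print("unsafe:", run(Counter.unsafe_increment), "of", expected)  # may come out lower
    print("safe:  ", run(Counter.safe_increment), "of", expected)    # always equals expected
```

The lock fixes correctness but serializes the critical section, so heavily contended locks also eat into the speedup; this is one reason languages that favour immutable data, such as the ones named above, are attractive for parallel work.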

Parallel computing may well have more innovation in the pipeline and holds great promise for the evolution of computing in general. It has already contributed immensely to both hardware engineering (processor manufacturing, networks) and software engineering practices.